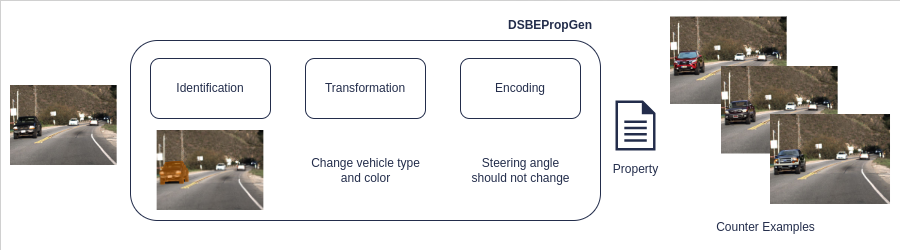

Deep Neural Networks (DNNs) that process images are being widely used for many safety-critical tasks, from autonomous vehicles to medical diagnosis. Currently, DNN correctness properties are defined at the pixel level over the entire input. Such properties are useful to expose system failures related to sensor noise or adversarial attacks, but they cannot capture features that are relevant to domain-specific entities and reflect richer types of behaviors. To overcome this limitation, we envision the specification of properties based on the entities that may be present in image input, capturing their semantics and how they change. Creating such properties today is difficult as it requires determining where the entities appear in images, defining how each entity can change, and writing a specification that is compatible with each particular V\&V client. We introduce an initial framework structured around those challenges to assist in the generation of Domain-specific Entity-based properties automatically by leveraging object detection models to identify entities in images and creating properties based on entity features. Our feasibility study provides initial evidence that the new properties can uncover interesting system failures, such as changes in skin color can modify the output of a gender classification network. We conclude by analyzing the framework potential to implement the vision and by outlining directions for future work.

Verification and Validation of Machine-Learned Models

Our group is currently leading work in the following areas:

Deeper Notions of Correctness in Image-Based DNNs: Lifting Properties from Pixel to Entities

Sponsors: This effort is supported by NSF Awards #2019239 and #2332991

Paper Link: https://felipetoledo4815.github.io/files/publications/FSE23-DSEB.pdf

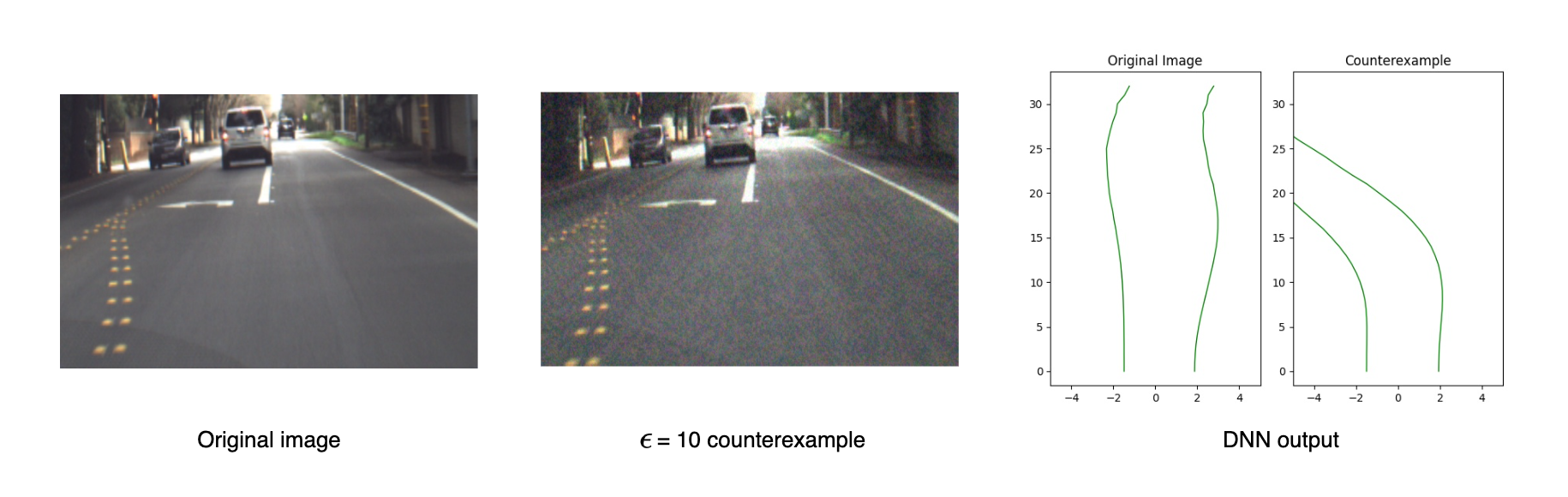

Determining Property Violations through Network Falsification: A Case Study of OpenPilot

Openpilot is an open source system to assist drivers by providing features like automated lane centering and adaptive cruise control. Like most systems for autonomous vehicles, Openpilot relies on a sophisticated deep neural network (DNN) to provide its functionality, one that is susceptible to safety property violations that can lead to crashes. To uncover such potential violations before deployment, we investigate the use of falsification, a form of directed testing that analyzes a DNN to generate an input that will cause a safety property violation. Specifically, we explore the application of a state-of-the-art falsifier to the DNN used in OpenPilot, which reflects recent trends in network design. Our investigation reveals the challenges in applying such falsifiers to real-world DNNs, conveys our engineering efforts to overcome such challenges, and showcases the potential of falsifiers to detect property violations and provide meaningful counterexamples. Finally, we summarize the lessons learned as well as the pending challenges for falsifiers to realize their potential on systems like OpenPilot.

Sponsors: This work was supported in part by NSF Award #1924777 and AFOSR Award #FA9550-21-1-0164.

Paper Link: https://dl.acm.org/doi/10.1145/3551349.3559500

Artifact Link: https://github.com/MissMeriel/openpilot-falsification

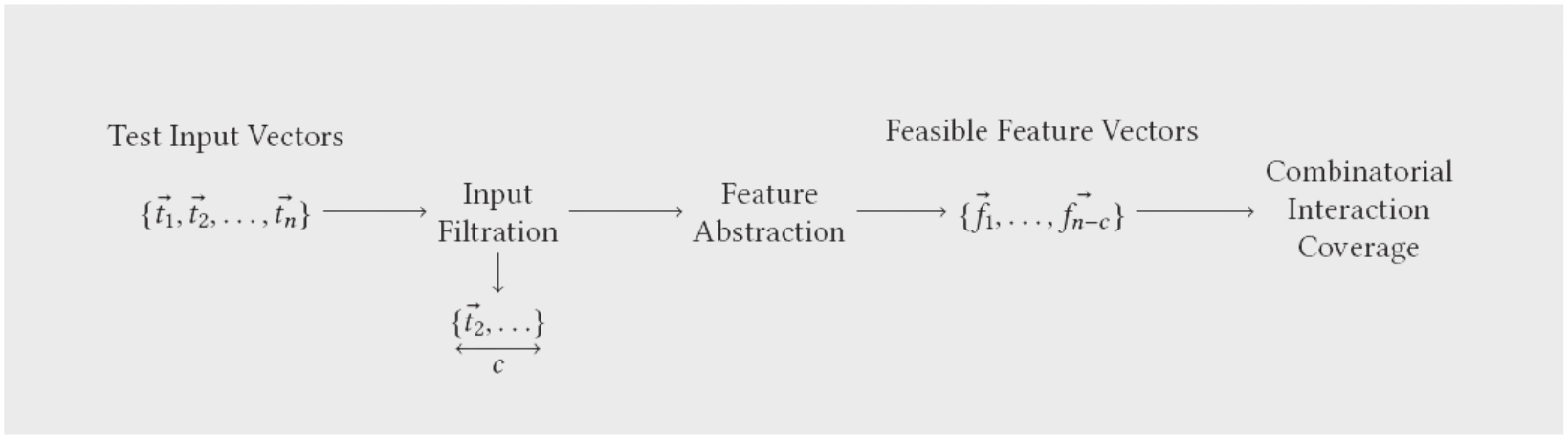

Input Distribution Coverage: Measuring Feature Interaction Adequacy in Neural Network Testing

Input Distribution Coverage (IDC) is a framework for measuring the test adequacy of neural networks. IDC rejects invalid inputs and converts the valid inputs into feature vectors, which represent the feature abstractions of the test inputs in a low-dimensional space. IDC applies combinatorial interaction testing measures over the space of the feature vectors to measure test coverage. Experimental studies demonstrate that the test adequacy measures calculated by IDC capture the feature interactions present in the test suites.

Sponsors: This effort is supported by NSF Awards #2019239 and #2129824, by The Air Force Office of Scientific Research under award #FA9550-21-0164, and by Lockheed Martin Advanced Technology Laboratories

Paper Link: https://dl.acm.org/doi/pdf/10.1145/3576040

Artifact Link: https://github.com/less-lab-uva/InputDistributionCoverage

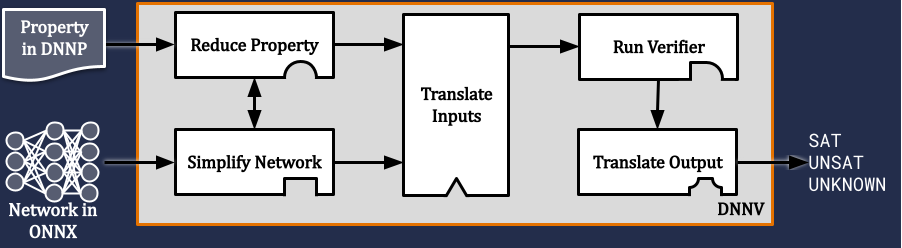

DNNV: Deep Neural Network Verification

DNNV is a framework for verifying deep neural networks (DNN). DNN verification takes in a neural network, and a property over that network, and checks whether the property is true, or false. DNNV standardizes the network and property input formats to enable multiple verification tools to run on a single network and property. This facilitates both verifier comparison, and artifact re-use.

Sponsors: This effort is supported by NSF Awards #1900676 and #2019239

Paper Link: https://arxiv.org/pdf/2105.12841.pdf

Artifact Link: https://github.com/dlshriver/DNNV

Distribution Models for Falsification and Verification of DNNs

DNN validation and verification approaches that are input distribution agnostic waste effort on irrelevant inputs and report false property violations. Drawing on the large body of work on model-based validation and verification of traditional systems, we introduce the first approach that leverages environmental models to focus DNN falsification and verification on the relevant input space. Our approach, DFV, automatically builds an input distribution model using unsupervised learning, prefixes that model to the DNN to force all inputs to come from the learned distribution, and reformulates the property to the input space of the distribution model. This transformed verification problem allows existing DNN falsification and verification tools to target the input distribution – avoiding consideration of infeasible inputs.

Sponsors: This effort is supported by NSF Awards #1900676 and #2019239

Paper Link: https://ieeexplore.ieee.org/iel7/9678507/9678392/09678590.pdf

Artifact Link: https://github.com/less-lab-uva/DFV-Artifact

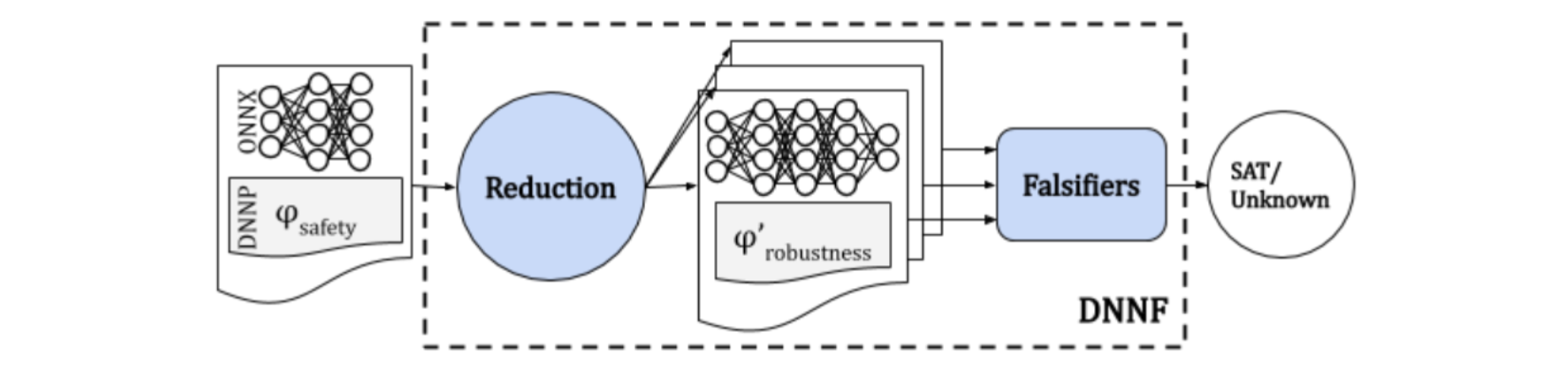

DNNF: Deep Neural Network Falsification

DNNF is a framework for falsification of deep neural networks (DNN). While many DNN verification techniques have been introduced in the past few years to enable the checking of DNN safety properties, these techniques are often limited in their applicability, due to simplifying assumptions about DNN structure or to high computational cost. Falsification is a complementary approach to verification that seeks only to find violations to a safety property. In the context of DNNs, adversarial attacks can be viewed as falsifiers for DNN local robustness properties. While these techniques often scale to large real-world DNNs, they are currently limited in the range of properties they can falsify. In Reducing DNN Properties to Enable Falsification with Adversarial Attacks, we introduce an approach for reducing a DNN and an associated safety property – a correctness problem – into an equivalid set of correctness problems formulated with robustness properties which can be processed by existing adversarial attack techniques. DNNF is the implementation of that approach.

Sponsors: This effort is supported by NSF Awards #1900676 and #2019239

Paper Link: https://dlshriver.com/assets/files/publications/ICSE21-DNNF.pdf

Artifact Link: https://github.com/dlshriver/DNNF

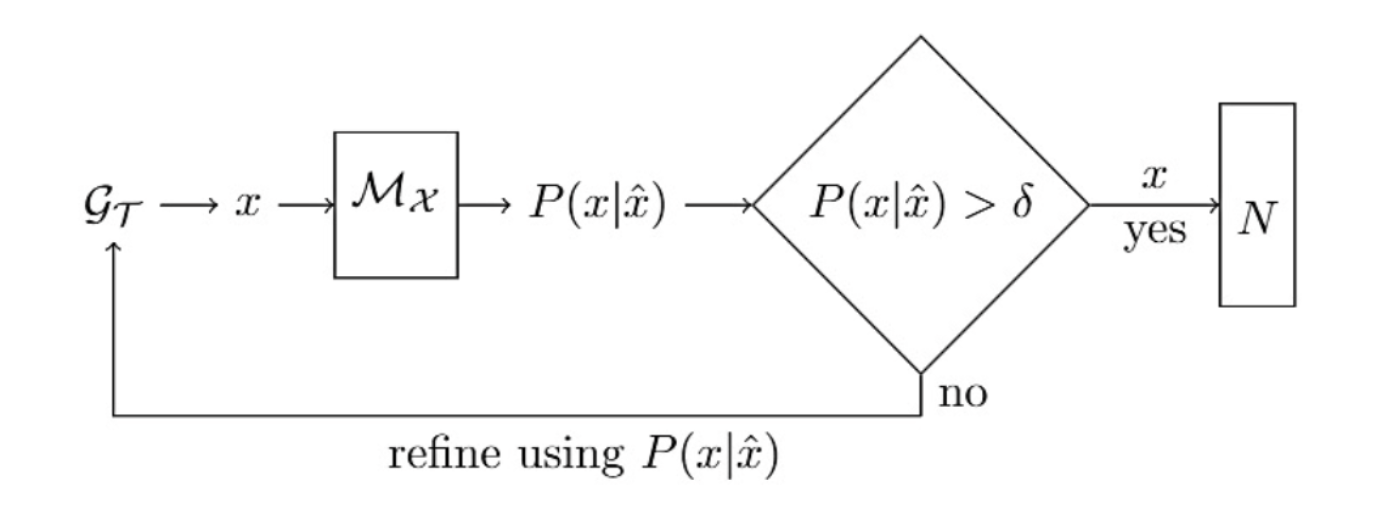

Testing Neural Networks with Generative Models

Our research involves developing techniques for testing DNNs using inputs that follow the training distribution of the DNN under test. Generative models can learn the distribution of the dataset they are trained on. This feature of the generative models is used for classifying test inputs as valid or invalid. The input classifier used in our study is a variational autoencoder (VAE) based algorithm that outputs the reconstruction probability of the input. The test generation technique is shown in the figure where 𝑀𝑥 is the VAE based input classifier. The test input generated by the test generator 𝐺𝑇 is passed through the input classifier which outputs the reconstruction probability of the input with respect to the input distribution estimated by the VAE. This probability can guide the 𝐺𝑇 in generating valid test inputs. We conducted a study to evaluate the test generation technique using DeepXplore as the baseline test generator for 8 DNN networks. The results indicate that the technique significantly increases the generation of valid test inputs and reduces the time to generate valid tests when compared to the baseline.

Sponsors: This effort is supported by NSF Awards #1900676 and #2019239

Paper Link: https://ieeexplore.ieee.org/iel7/9401807/9401950/09402100.pdf

Artifact Link: https://github.com/swa112003/DistributionAwareDNNTesting

GDVB: Systematic Generation of Diverse Benchmarks for DNN Verification

GDVB is a framework for generating benchmarks for DNN verification. GDVB leverages the idea of conbinatorial interaction testing in software engineering, as well as the combinations of the identified nine controlling factors that contributes to DNN verification performance. With a given seed DNN verification problem, it can generate a systematic and diverse benchmark that challenges DNN verification tools’ capabilities in various aspects. Refer to the paper and the tool’s website for more details.

Sponsors: This effort is supported by NSF Awards #1900676 and #1901769 as well as the U.S. Army Research Office Grant #W911NF-19-1-0054

Paper Link: https://link.springer.com/chapter/10.1007/978-3-030-53288-8_5

Artifact Link: https://github.com/edwardxu0/gdvb